Interactive Projected Light with Kinect V2 Sensor

Motivation

My first introduction to interactive projection art was an advertisement for Travelers Insurance in the Minneapolis/Saint Paul Airport back in 2008, two years before Microsoft released the Kinect sensor for gaming. It was immediately captivating and maybe the first time that I really felt motivated to learn more about computer science. It would be another 8 years until I finally bought a used Kinect V2 sensor for PC off eBay to make my own interactive art. By that time Microsoft had already discontinued production of the Kinect and was rapidly ending all software support. I knew I would have to act quickly to explore the possibilities.

Process

I had dabbled a bit with the Processing programming language before this point, and after a little research it became clear that it would be the easiest way to get some interesting use out of my Kinect. Daniel Shiffman’s page and videos for Processing.org were a helpful starting point, and I eventually modeled the start of all my programs on his examples.

The hardest part of getting started wasn’t writing programs for the sensor data, but just getting the darn thing detected by my computer. The Open Kinect Project, which Shiffman links to on his page, was a critical aid in getting the right drivers installed.

In particular, I needed to install Microsoft’s Kinect for Windows SDK 2.0. As many of the pages on Microsoft’s site are keen to remind you, support for these resources has ended. I found that discouraging and wasn’t sure I was going to make any headway, but I soldiered on.

In Processing I wasn’t able to get any of the examples working for the various libraries I added, including “Kinect4WinSDK” and “Open Kinect for Processing.” The issue tracker on Shiffman’s GitHub repository for the project might offer some solutions, but I didn’t dig too deep. I was only able to get Shiffman’s “Kinect v2 for Processing” examples to work after installing the libusbK driver. These are the directions from https://github.com/OpenKinect/libfreenect2:

Installing libusbK driver:

You don't need the Kinect for Windows v2 SDK to build and install libfreenect2, though it doesn't hurt to have it too. You don't need to uninstall the SDK or the driver before doing this procedure.

Install the libusbK backend driver for libusb. Please follow the steps exactly:

1. Download Zadig from http://zadig.akeo.ie/.

2. Run Zadig and in options, check "List All Devices" and uncheck "Ignore Hubs or Composite Parents"

3. Select the "Xbox NUI Sensor (composite parent)" from the drop-down box. (Important: Ignore the "NuiSensor Adaptor" varieties, which are the adapter, NOT the Kinect) The current driver will list usbccgp. USB ID is VID 045E, PID 02C4 or 02D8.

4. Select libusbK (v3.0.7.0 or newer) from the replacement driver list.

5. Click the "Replace Driver" button. Click yes on the warning about replacing a system driver. (This is because it is a composite parent.)

To uninstall the libusbK driver (and get back the official SDK driver, if installed):

1. Open "Device Manager"

2. Under "libusbK USB Devices" tree, right click the "Xbox NUI Sensor (Composite Parent)" device and select uninstall.

3. Important: Check the "Delete the driver software for this device." checkbox, then click OK.

If you already had the official SDK driver installed and you want to use it:

4. In Device Manager, in the Action menu, click "Scan for hardware changes."

This will enumerate the Kinect sensor again and it will pick up the K4W2 SDK driver, and you should be ready to run KinectService.exe again immediately. You can go back and forth between the SDK driver and the libusbK driver very quickly and easily with these steps.

After all this, I was finally able to get meaningful data from the Kinect sensor into Processing and create some visual outputs. At this point I took the next step and invested in a cheap projector, so the visuals wouldn’t be stuck on my computer screen. I bought a 2400 lux Vankyo projector off Amazon for $99. Its throw distance wasn’t very good, which meant my project would have to be small scale (interaction with hands, not bodies). A better projector cost more than I could justify, and since I planned to install this project in the desert at Burning Man, I didn’t want anything too nice. The final interaction area was about 2 x 4 feet, and at that scale the image still looked decent.
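A projector’s throw ratio is the throw distance divided by the width of the image it casts, so at a fixed mounting distance a higher ratio means a smaller picture. The short plain-Java sketch below (Java being the language Processing is built on) just applies that definition; the 1.4 ratio and 4 ft distance are hypothetical numbers, not the Vankyo’s specifications.

```java
// Throw-ratio arithmetic: throwRatio = throwDistance / imageWidth.
// The ratio and distances used in main() are hypothetical, not measured from a real projector.
class ThrowRatio {
    // Width of the projected image at a given lens-to-surface distance.
    static double imageWidth(double throwDistance, double throwRatio) {
        return throwDistance / throwRatio;
    }

    // Lens-to-surface distance needed for a given image width.
    static double distanceFor(double imageWidth, double throwRatio) {
        return imageWidth * throwRatio;
    }

    public static void main(String[] args) {
        double ratio = 1.4; // hypothetical throw ratio
        System.out.printf("Mounted 4 ft above the table: %.2f ft wide%n", imageWidth(4.0, ratio));
        System.out.printf("Needed for a 4 ft wide image: %.2f ft away%n", distanceFor(4.0, ratio));
    }
}
```

Running the relationship in both directions makes it easy to check whether a projector can cover a given table from the height a rig allows.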

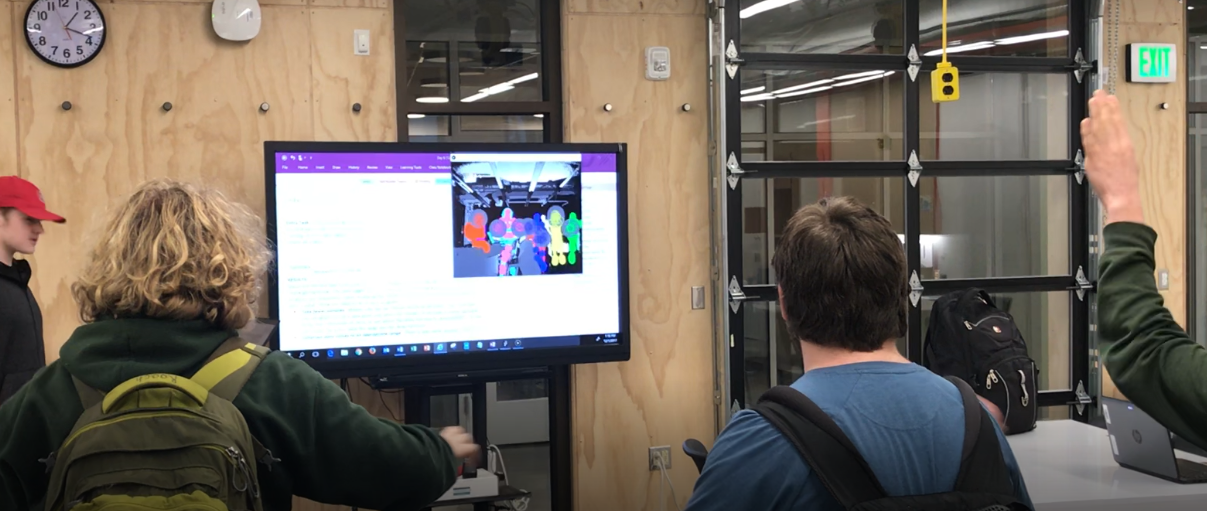

I laser cut a sloppy rig to mount the Kinect and projector next to each other, then set about recreating the type of project I’d most commonly seen done with this setup: a real-time topographic projection that reacts to changes in the elevation of the surface below. The pictures below include an installation I saw in the MIT Media Lab in the summer of 2019 using a Kinect V1 sensor.
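The topographic effect comes down to slicing the depth image’s brightness into a few bands and giving each band a color. The plain-Java sketch below reproduces the integer arithmetic that the sequencer code at the end of this page uses for its layer boundaries (lowBound = 55, highBound = 69, four layers); the class and method names are hypothetical.

```java
// Splits depth-image brightness into height bands using the same integer arithmetic
// as firstLayer..fourthLayer in the sequencer sketch. Class and method names are illustrative.
class DepthBands {
    final int lowBound;
    final int highBound;
    final int layerNumber;

    DepthBands(int lowBound, int highBound, int layerNumber) {
        this.lowBound = lowBound;
        this.highBound = highBound;
        this.layerNumber = layerNumber;
    }

    // Upper edge of band k, where k = 1 is the band nearest lowBound.
    int edge(int k) {
        return lowBound + k * (highBound - lowBound) / layerNumber;
    }

    // Returns layerNumber for the band closest to the camera, down to 1 for the farthest,
    // or 0 when b is outside (lowBound, highBound) and the pixel should be drawn black.
    int band(float b) {
        if (b <= lowBound || b >= highBound) {
            return 0;
        }
        for (int k = 1; k <= layerNumber; k++) {
            if (b <= edge(k)) {
                return layerNumber + 1 - k;
            }
        }
        return 0; // not reached: edge(layerNumber) always equals highBound
    }

    public static void main(String[] args) {
        DepthBands bands = new DepthBands(55, 69, 4);
        for (int k = 1; k <= 4; k++) {
            System.out.println("edge " + k + " = " + bands.edge(k));
        }
        System.out.println("brightness 60 -> band " + bands.band(60f));
    }
}
```

With those values the band edges come out at 58, 62, 65 and 69. Because the edges use integer division, nudging lowBound or highBound by one does not always move every edge.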

It wasn’t too difficult to replicate the basics of this project, so I built on it to create a music sequencer. A white bar would scan across the interactive area, and if it passed over a circular object (made from PVC pipe sections), it would play a sound. The sound that played was determined by the height of the object, and its pitch was changed by moving the object up or down within the interactive area (this can be seen in the video at the top of the page). This project taught me a lot that was helpful for future programs, in particular how to line up the depth data with the projected image. The Kinect sees a much wider area than the projector can cover, so all my programs use only a small rectangle of that area. I included a way to calibrate the position of this rectangle using the keyboard. Whenever I moved the Kinect/projector setup, I would always run this program first to help align the two.
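Lining the depth data up with the projection is a linear mapping from the calibrated rectangle (xMin..xMax, yMin..yMax in the 512 x 424 depth image) onto the projector’s pixels, with the x axis mirrored as in the sequencer code. The plain-Java sketch below reproduces the formula behind Processing’s map() function; the class name and the inverse helpers are hypothetical additions, useful for example when hit-testing projected buttons.

```java
// Maps pixels inside the calibrated depth-image rectangle to projector pixels.
// map() reproduces the linear formula of Processing's map(); class and helper names are illustrative.
class KinectToProjector {
    int xMin, xMax, yMin, yMax;   // calibration rectangle, in depth-image pixels
    final float projW, projH;     // projector resolution

    KinectToProjector(int xMin, int xMax, int yMin, int yMax, float projW, float projH) {
        this.xMin = xMin;
        this.xMax = xMax;
        this.yMin = yMin;
        this.yMax = yMax;
        this.projW = projW;
        this.projH = projH;
    }

    static float map(float value, float start1, float stop1, float start2, float stop2) {
        return start2 + (stop2 - start2) * ((value - start1) / (stop1 - start1));
    }

    // x is mirrored: xMin lands on the right edge of the projection, xMax on the left.
    float projX(float kinectX) { return map(kinectX, xMin, xMax, projW, 0); }
    float projY(float kinectY) { return map(kinectY, yMin, yMax, 0, projH); }

    // Inverse mapping: which depth pixel lies under a given projector pixel.
    int kinectX(float px) { return Math.round(map(px, projW, 0, xMin, xMax)); }
    int kinectY(float py) { return Math.round(map(py, 0, projH, yMin, yMax)); }

    public static void main(String[] args) {
        KinectToProjector m = new KinectToProjector(131, 383, 147, 300, 1920, 1080);
        System.out.println("depth (131, 147) -> projector (" + m.projX(131) + ", " + m.projY(147) + ")");
        System.out.println("depth (383, 300) -> projector (" + m.projX(383) + ", " + m.projY(300) + ")");
        System.out.println("projector x 960 -> depth x " + m.kinectX(960));
    }
}
```

Pressing a calibration key only changes one of the four rectangle bounds; everything drawn afterwards picks up the new mapping.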

Since this was my first program written for the Kinect project, and the most involved I had written in Processing up to that point, it isn’t pretty, but it got the job done. I’ve shared it at the bottom of the page.

In addition to this intro project, I made the following experiences (many can be seen in the video):

1) A grassy field with calm meadow sounds playing. When you move your hand over the grass, a butterfly object is spawned every half second or so. Once spawned, a butterfly flits about randomly. There is a maximum number of butterflies, so once a new one is created over that limit, the oldest one fades away.

2) The program begins with a black screen. When an object is placed over the interactive space, it permanently changes the color of the pixels beneath it, depending on the height of the object. There are four height ranges, corresponding to four different colors. The four colors are randomly changed every 10 seconds (this doesn’t change what was already painted, but any new changes are made in the new palette).

3) A face mixing game created from pictures of my Burning Man camp mates. Hovering your hand over the left and right arrows lets you scroll through different face parts (nose down, nose to forehead, and forehead up).

4) An image of a mysterious temple door is displayed, surrounded by 4 runes. Creepy wind noises play in the background. Holding your hand above a rune creates a harmonic tone, and each rune makes a different tone. If all four runes are covered at once, the doors of the temple open, revealing a random, whimsical image. Uncovering any of the runes causes the doors to close again. If they shut all the way, another image is loaded behind the door. Images included Trump, a tardigrade, Sasquatch, a kitten, pin-up models, Momo, etc.

5) A solid background image of The Black Rock Lighthouse Service (Burning Man 2016) is displayed while gentle new-age music plays. Moving your hand creates a trail of connected triangles, each a different color. Moving your hand closer to the table makes the triangles generate faster, reducing their size, while moving your hand higher increases the time between each triangle being drawn, making them bigger. A maximum number of triangles is allowed, so after that number is reached, each new triangle causes the oldest one to fade away.

6) A dry desert landscape is silently displayed. As you wave your hand over the landscape, attractive men begin to fall downwards and “It’s Raining Men” by The Weather Girls begins to play. The more men on the screen, the louder the song. If all the men are allowed to fall off the screen, the desert goes silent again. Holding your hand close to the table creates large men that move quickly, while raising your hand upwards creates tiny men that fall slowly.

7) The table appears to be a blank white image, but regimenting music plays. As you move your hand over the table, it looks like you are removing frost or white dust from a hidden image. The longer you keep your hand in one area, or the closer to the table you bring it, the more exposed the image becomes in that area. Once you remove your hand, the uncovered areas slowly frost back over, hiding the image. The hidden image is randomly changed every 20 seconds.

8) A dusky landscape is displayed with three vertical lines, evenly spaced. Moving your hand upwards along any of the lines causes the line to split open at the top, bending outwards into a recursive fractal tree. If your hand is brought all the way from the bottom to the top, the tree opens to its maximum, folding into a square. When the trees are open, a sound like dry bamboo chimes can be heard. The further open the trees, the louder the sound. When the trees are closed, no sound is heard.

9) Six white, sinister-looking cubes slowly rotate in 3D space against a background of stars. If you move your hand over a cube, it turns red and a scary, sci-fi sound is played. Each cube plays a different sound.

10) A static image is displayed that appears to spiral into the center of the table. The sound of running water can be heard. Moving your hand over the table creates a white bubble that spirals in towards the center of the image, getting smaller and smaller until it can no longer be seen. Moving your hand higher or lower causes the bubbles to spawn at different rates.
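Experiences 1 and 5 share a pattern: new items appear on a timer while a hand is present, and once a cap is reached the oldest one fades out. Since that code was lost, here is a hypothetical plain-Java sketch of the bookkeeping only (no drawing); the class names, the 500 ms interval, and the linear fade are assumptions, not the original implementation.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

// Hypothetical bookkeeping for "spawn while a hand is present, cap the count,
// fade out the oldest". Drawing is left out; alpha would be passed to fill()/tint().
class Sprite {
    final float x, y;       // spawn position (a real butterfly would also move)
    float alpha = 255;      // fully opaque when spawned
    boolean fading = false;

    Sprite(float x, float y) {
        this.x = x;
        this.y = y;
    }
}

class CappedSpawner {
    final int maxLive;            // most sprites allowed to be fully visible at once
    final long spawnIntervalMs;   // minimum time between spawns
    final float fadePerUpdate;    // alpha lost per update once a sprite starts fading
    final List<Sprite> sprites = new ArrayList<>(); // oldest first
    long lastSpawnMs = -1;        // -1 means nothing has spawned yet

    CappedSpawner(int maxLive, long spawnIntervalMs, float fadePerUpdate) {
        this.maxLive = maxLive;
        this.spawnIntervalMs = spawnIntervalMs;
        this.fadePerUpdate = fadePerUpdate;
    }

    int liveCount() {
        int live = 0;
        for (Sprite s : sprites) {
            if (!s.fading) live++;
        }
        return live;
    }

    // Call once per frame with the current time and the hand position from the depth data.
    void update(long nowMs, boolean handPresent, float handX, float handY) {
        if (handPresent && (lastSpawnMs < 0 || nowMs - lastSpawnMs >= spawnIntervalMs)) {
            sprites.add(new Sprite(handX, handY));
            lastSpawnMs = nowMs;
        }
        // Start fading the oldest opaque sprites until only maxLive remain opaque.
        int live = liveCount();
        for (Sprite s : sprites) {
            if (live <= maxLive) break;
            if (!s.fading) {
                s.fading = true;
                live--;
            }
        }
        // Advance every fade and drop sprites that have become invisible.
        Iterator<Sprite> it = sprites.iterator();
        while (it.hasNext()) {
            Sprite s = it.next();
            if (s.fading) {
                s.alpha -= fadePerUpdate;
                if (s.alpha <= 0) it.remove();
            }
        }
    }

    public static void main(String[] args) {
        CappedSpawner butterflies = new CappedSpawner(3, 500, 100f);
        for (long t = 0; t <= 2000; t += 100) {
            butterflies.update(t, true, 0f, 0f);
        }
        System.out.println("on screen: " + butterflies.sprites.size()
                + ", opaque: " + butterflies.liveCount());
    }
}
```

Marking sprites as fading instead of deleting them right away is what lets the oldest one fade out gracefully while the newest appears.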
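Experience 4 is a small state machine: the doors open only while all four runes are covered, close otherwise, and a new image is loaded once they shut completely. A hypothetical plain-Java sketch of that logic follows; the rune inputs are assumed to be booleans already derived from the depth data, and it cycles through images in order where the original chose one at random.

```java
// Hypothetical door logic for the rune experience: the doors open only while
// every rune is covered, close otherwise, and switch the hidden image each
// time they shut completely after having been open.
class RuneDoor {
    float openness = 0f;              // 0 = shut, 1 = fully open
    final float speed;                // change in openness per update
    final int imageCount;             // how many hidden images there are
    int imageIndex = 0;               // image currently behind the door
    boolean openedSinceShut = false;  // has the door opened since it last shut?

    RuneDoor(float speed, int imageCount) {
        this.speed = speed;
        this.imageCount = imageCount;
    }

    void update(boolean[] runeCovered) {
        boolean allCovered = true;
        for (boolean covered : runeCovered) {
            allCovered &= covered;
        }
        if (allCovered) {
            openness = Math.min(1f, openness + speed);
        } else {
            openness = Math.max(0f, openness - speed);
        }
        if (openness > 0f) {
            openedSinceShut = true;
        } else if (openedSinceShut) {
            // Just shut all the way: load the next image (cycled in order for determinism).
            imageIndex = (imageIndex + 1) % imageCount;
            openedSinceShut = false;
        }
    }

    public static void main(String[] args) {
        RuneDoor door = new RuneDoor(0.25f, 6);
        boolean[] allFour = {true, true, true, true};
        boolean[] threeOfFour = {true, true, false, true};
        for (int i = 0; i < 4; i++) door.update(allFour);
        System.out.println("open: " + door.openness);
        for (int i = 0; i < 4; i++) door.update(threeOfFour);
        System.out.println("open: " + door.openness + ", image: " + door.imageIndex);
    }
}
```

Tracking whether the door has opened since it last shut keeps the image from changing on every frame while the door simply sits closed.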
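Experience 7 can be modelled as a per-cell “frost” value that a nearby hand wipes away and that slowly grows back. The hypothetical plain-Java sketch below assumes the depth data has already been reduced to a closeness value per grid cell (0 for no hand, 1 for a hand touching the table); the grid, the rates, and the clamping are illustrative choices, not the lost original.

```java
import java.util.Arrays;

// Hypothetical "wipe away the frost" layer on a coarse grid of cells.
// frost[i] = 1 means cell i is fully white; 0 means the hidden image shows through.
class FrostLayer {
    final float[] frost;
    final float wipeRate;    // frost removed per update by a hand touching the table
    final float regrowRate;  // frost added back per update where no hand is present

    FrostLayer(int cells, float wipeRate, float regrowRate) {
        this.frost = new float[cells];
        Arrays.fill(frost, 1f); // start fully frosted
        this.wipeRate = wipeRate;
        this.regrowRate = regrowRate;
    }

    // closeness[i] is 0 when no hand is over cell i and rises to 1 as the hand nears the table.
    // Holding a hand in place keeps wiping on every update, and a closer hand wipes faster.
    void update(float[] closeness) {
        for (int i = 0; i < frost.length; i++) {
            if (closeness[i] > 0) {
                frost[i] -= wipeRate * closeness[i];
            } else {
                frost[i] += regrowRate;
            }
            frost[i] = Math.max(0f, Math.min(1f, frost[i]));
        }
    }

    // Alpha (0-255) for the white overlay drawn over cell i.
    int overlayAlpha(int i) {
        return Math.round(frost[i] * 255);
    }

    public static void main(String[] args) {
        FrostLayer layer = new FrostLayer(2, 0.25f, 0.1f);
        float[] handOverFirstCell = {1f, 0f};
        for (int frame = 0; frame < 3; frame++) {
            layer.update(handOverFirstCell);
        }
        System.out.println("alphas: " + layer.overlayAlpha(0) + ", " + layer.overlayAlpha(1));
    }
}
```

Drawing the hidden image first and then a white rectangle per cell with overlayAlpha() as its alpha would produce the reveal.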

After successfully installing this project at Burning Man 2019, I brought it home and installed it in my high school classroom. Unfortunately, the computer that had most of the programs described above was stolen during a series of burglaries. I only have the music sequencer program (because I made it on my desktop computer before buying the projector) and the raining men and temple door programs (I had removed them from the laptop because they had some NSFW images). I also lost a nice template file that I had created, which I would have loved to share with you. Darn crooks.

Example Code

/* Music Sequencer for Kinect V2 + Projector
   This Processing program matches the data from a Kinect V2 sensor to a projector.
   When circular objects of a particular height are detected (indicated by a white bar
   scanning across the interactive/projected area), a sound is played. The pitch of the
   sound is changed by moving the object higher or lower in the interactive area.
   Johnny Devine 2019 */

import org.openkinect.processing.*;
import processing.sound.*;

Kinect2 kinect2;
Scanner scanner;
SoundFile soundfile2;
SoundFile soundfile3;
SoundFile soundfile4;

int progStartTime; //used to delay event detection when starting
int lastTime;      //used to move scanner across screen
int tempo = 20;    //used to determine speed of scanner (milliseconds per column)

//These are the depth values that define the differences in sound/color
int layerNumber = 4;
int lowBound = 55;
int highBound = 69;
float skip = 10;
int radius = 8;
int border = 2;
float sensitivity = 0.02;

//These are the pixels that define the portion of the Kinect image that should be mapped to the projector
int xMin = 131;
int xMax = 383;
int yMin = 147;
int yMax = 300;

//The Kinect V2 depth image is 512 x 424 pixels (217088 in total). Sizing every array to the
//full frame keeps them large enough when the calibration keys move xMin/xMax/yMin/yMax.
final int KINECT_W = 512;
final int KINECT_H = 424;

//arrays to store depth data, color, individual pixel information for each dimension, whether a triggering event was detected, etc.
float[] bOnePrevious = new float[KINECT_W * KINECT_H];
float[] bTwoPrevious = new float[KINECT_W * KINECT_H];
int[] pixelColors = new int[KINECT_W * KINECT_H];
int[] pixelX = new int[KINECT_W * KINECT_H];
int[] pixelY = new int[KINECT_W * KINECT_H];
int[] events = new int[KINECT_W];    //layer that triggered in each column (0 = none)
int[] modifiers = new int[KINECT_W]; //to adjust pitch of sounds

//defined height ranges for different sounds/colors (set by updateLayers())
int boundDelta;
int firstLayer;
int secondLayer;
int thirdLayer;
int fourthLayer;

void setup() {
  fullScreen(2); //This sends the visual output to the second monitor, in this case the projector
  //size(512, 424); //This is the number of pixels returned by the Kinect by default
  kinect2 = new Kinect2(this);
  scanner = new Scanner();
  kinect2.initDepth();
  kinect2.initDevice();
  updateLayers();
  lastTime = millis();
  progStartTime = millis();
  //Images and sounds used by the program need to be in the same folder or a sub folder named 'data'
  soundfile2 = new SoundFile(this, "harp-short-12.wav");
  //soundfile3 = new SoundFile(this, "harp-short-11.wav");
  soundfile3 = new SoundFile(this, "harp-short-10.wav");
  soundfile4 = new SoundFile(this, "chimes-d-3-f-1.wav");
}

//Recalculate the height ranges from lowBound/highBound.
//Called at startup and whenever the calibration keys change either bound.
void updateLayers() {
  boundDelta = highBound - lowBound;
  firstLayer = lowBound + boundDelta/layerNumber;
  secondLayer = lowBound + 2 * boundDelta/layerNumber;
  thirdLayer = lowBound + 3 * boundDelta/layerNumber;
  fourthLayer = lowBound + 4 * boundDelta/layerNumber;
}

void draw() {
  background(0);
  //Have the Kinect look at what is in front of it
  //Then define that depth data as a 'brightness' at each pixel
  //Based on the brightness of the pixel, draw a rectangle at that location with the appropriate color
  PImage img = kinect2.getDepthImage();
  //image(img,0,0); //enable to help align projector
  noStroke();
  int tempIndex = 0;
  for (int y = yMin; y < yMax; y++) {
    for (int x = xMin; x < xMax; x++) {
      int index = x + y * img.width;
      float b = brightness(img.pixels[index]);
      //calculate the average of the last three b values.
      //Slows things down, but makes for less random flashing
      b = (b + bOnePrevious[index] + bTwoPrevious[index])/3;
      bTwoPrevious[index] = bOnePrevious[index];
      bOnePrevious[index] = b;
      pixelColors = colorHandler(b, tempIndex, pixelColors);
      pixelX[tempIndex] = x - xMin; //subtracting min values makes these start at zero
      pixelY[tempIndex] = y - yMin;
      //x is mirrored (xMin maps to the right edge); width x height is 1920 x 1080 on a 1080p projector
      rect(map(x, xMin, xMax, width, 0), map(y, yMin, yMax, 0, height), skip, skip);
      tempIndex++;
    }
  }
  //Update the scanner object so it moves its position across the screen.
  //The scanner looks for trigger events and will play a sound if the conditions are met.
  //See the Scanner class for details.
  boolean moved = scanner.scan();
  scanner.display();
  //Only check and play once per new column, so a note is not re-triggered on frames where
  //the scanner has not moved yet; detection also waits 3 seconds after start-up
  if (moved && millis() - progStartTime > 3000) {
    scanner.checkEvents();
    scanner.playEvents();
  }
  scanner.clearModifiers();
}

//Determine color of rectangle based on brightness of
//depth image pixel and monitor event progress
int[] colorHandler(float b, int i, int[] pixs) {
  //Make black if too far or close
  if (b >= highBound || b <= lowBound) {
    fill(0);
    pixs[i] = 0;
  }
  //Closest to the camera: white snow caps
  else if (b > lowBound && b <= firstLayer) {
    fill(254, 254, 254);
    pixs[i] = 4;
  }
  //Second closest to camera: rocky highlands
  else if (b > firstLayer && b <= secondLayer) {
    //fill(204,102,0);
    fill(255, 0, 0);
    pixs[i] = 3;
  }
  //Third closest to the camera: green forest
  else if (b > secondLayer && b <= thirdLayer) {
    //fill(100,100,0);
    fill(0, 255, 0);
    pixs[i] = 2;
  }
  //Fourth closest to the camera: blue ocean
  else if (b > thirdLayer && b <= fourthLayer) {
    fill(0, 0, 255);
    pixs[i] = 1;
  }
  return pixs;
}

//Used to calibrate position between kinect image and projection
void keyPressed() {
  //lowBound adjusts the boundary closer to the kinect.
  //Increasing moves the boundary further from the camera.
  if (key == 'q') {
    lowBound += 1;
    println("lowBound = " + lowBound);
  } else if (key == 'a') {
    lowBound -= 1;
    println("lowBound = " + lowBound);
  }
  //highBound adjusts the boundary farther away from the kinect.
  //Increasing makes the boundary further away.
  if (key == 'w') {
    highBound += 1;
    println("highBound = " + highBound);
  } else if (key == 's') {
    highBound -= 1;
    println("highBound = " + highBound);
  } else if (key == 'e') {
    xMax += 1;
    println("xMax = " + xMax);
  } else if (key == 'd') {
    xMax -= 1;
    println("xMax = " + xMax);
  } else if (key == 'r') {
    xMin += 1;
    println("xMin = " + xMin);
  } else if (key == 'f') {
    xMin -= 1;
    println("xMin = " + xMin);
  } else if (key == 't') {
    yMax += 1;
    println("yMax = " + yMax);
  } else if (key == 'g') {
    yMax -= 1;
    println("yMax = " + yMax);
  } else if (key == 'y') {
    yMin += 1;
    println("yMin = " + yMin);
  } else if (key == 'h') {
    yMin -= 1;
    println("yMin = " + yMin);
  }
  //Keep the calibration rectangle inside the 512 x 424 depth image
  xMin = constrain(xMin, 0, xMax - 1);
  xMax = constrain(xMax, xMin + 1, KINECT_W - 1);
  yMin = constrain(yMin, 0, yMax - 1);
  yMax = constrain(yMax, yMin + 1, KINECT_H - 1);
  //The height ranges depend on lowBound/highBound, so recompute them
  updateLayers();
}

This is the Scanner class used in the code above:

class Scanner {
  //variables
  int x;
  int y;

  //constructor
  Scanner() {
    x = xMin;
    y = yMin;
  }

  //Move one column every 'tempo' milliseconds. At the end of the calibrated area (or if the
  //calibration keys moved the area past the scanner), jump back to the start and forget the
  //events from the previous pass. Returns true when the scanner is in a new column this frame.
  boolean scan() {
    boolean moved = false;
    if (millis() - lastTime >= tempo) {
      x++;
      lastTime = millis();
      moved = true;
    }
    if (x > xMax || x < xMin) {
      x = xMin;
      clearEvents();
      moved = true;
    }
    return moved;
  }

  void display() {
    noStroke();
    fill(127, 90);
    rect(map(x, xMin, xMax, width, 0), map(y, yMin, yMax, 0, height), 2*skip, (yMax - yMin)*skip);
  }

  //Play the sound for the layer recorded in the current column (layers 2-4; layer 1 is silent).
  //The event's y position sets the playback rate: 0.5 at yMin up to 1.5 at yMax.
  void playEvents() {
    int col = x - xMin;
    float rate = map(float(modifiers[col]), 0.0, float(yMax - yMin), 0.5, 1.5);
    if (events[col] == 2) {
      soundfile2.play();
      soundfile2.rate(rate);
    }
    if (events[col] == 3) {
      soundfile3.play();
      soundfile3.rate(rate);
    }
    if (events[col] == 4) {
      soundfile4.play();
      soundfile4.rate(rate);
      soundfile4.amp(.3);
    }
  }

  //Layers 2, 3 and 4 each trigger a sound; layer 1 (blue) is silent
  void checkEvents() {
    for (int layer = 2; layer <= 4; layer++) {
      checkLayer(layer);
    }
  }

  //Search the scanner's current column for a blob of the given layer
  void checkLayer(int layer) {
    int stride = xMax - xMin;       //pixels per row, as stored by draw()
    int col = x - xMin;
    int margin = 2*radius + border; //ignore pixels too close to the edges
    for (int i = 0; i < (yMax - yMin); i++) { //check all pixels in that column
      int idx = col + i*stride;
      if (pixelColors[idx] == layer
          && pixelY[idx] > margin && pixelY[idx] < (yMax - yMin) - margin
          && pixelX[idx] > margin && pixelX[idx] < stride - margin) {
        //exclude pixels within a certain distance of already recorded events for this layer
        boolean otherEvents = false;
        for (int a = -radius*2; a < radius; a++) {
          if (events[pixelX[idx] + a] == layer) {
            otherEvents = true;
          }
        }
        if (!otherEvents) {
          float matches = 0;
          float misses = 0;
          //Look at every pixel in a 2*radius x 2*radius square whose top-left corner is this pixel
          for (int yCheck = 0; yCheck < 2*radius; yCheck++) {
            for (int xCheck = 0; xCheck < 2*radius; xCheck++) {
              if (pixelColors[(col + xCheck) + (i + yCheck)*stride] == layer) {
                matches++;
              } else {
                misses++;
              }
            }
          }
          //An event is recorded when the ratio of matching to non-matching pixels
          //is within 'sensitivity' of 0.785
          float trueRatio = matches/misses;
          //if(trueRatio - sensitivity > 0.65 && trueRatio + sensitivity < 0.82){
          if (Math.abs(trueRatio - 0.785) < sensitivity) {
            //println("Event added at x = " + pixelX[idx] + " with a trueRatio of " + trueRatio);
            events[pixelX[idx]] = layer;
            modifiers[pixelX[idx]] = pixelY[idx];
          }
        }
      }
    }
  }

  void clearEvents() {
    for (int i = 0; i < events.length; i++) {
      events[i] = 0;
    }
  }

  void clearModifiers() {
    for (int i = 0; i < modifiers.length; i++) {
      modifiers[i] = 0;
    }
  }
}